A new kind of adapter helps LLMs get their words out faster

IBM Research has modified the traditional low-rank adapter, or LoRA, to give LLMs specialized capabilities at inference time without the usual delay. A set of task-specific, inference-friendly adapters is now available on Hugging Face.

Low-rank adapters, or LoRAs, are a fast way to give generalist large language models targeted knowledge and skills so they can do things like summarize IT manuals or rate the accuracy of their own answers. But calling on LLMs augmented with LoRAs can quickly bog down their performance.

That’s because when you switch from a generalist foundation model to one customized using LoRA, the customized model must reprocess the conversation up until that point, creating computation and memory costs that can lead to long runtime delays.

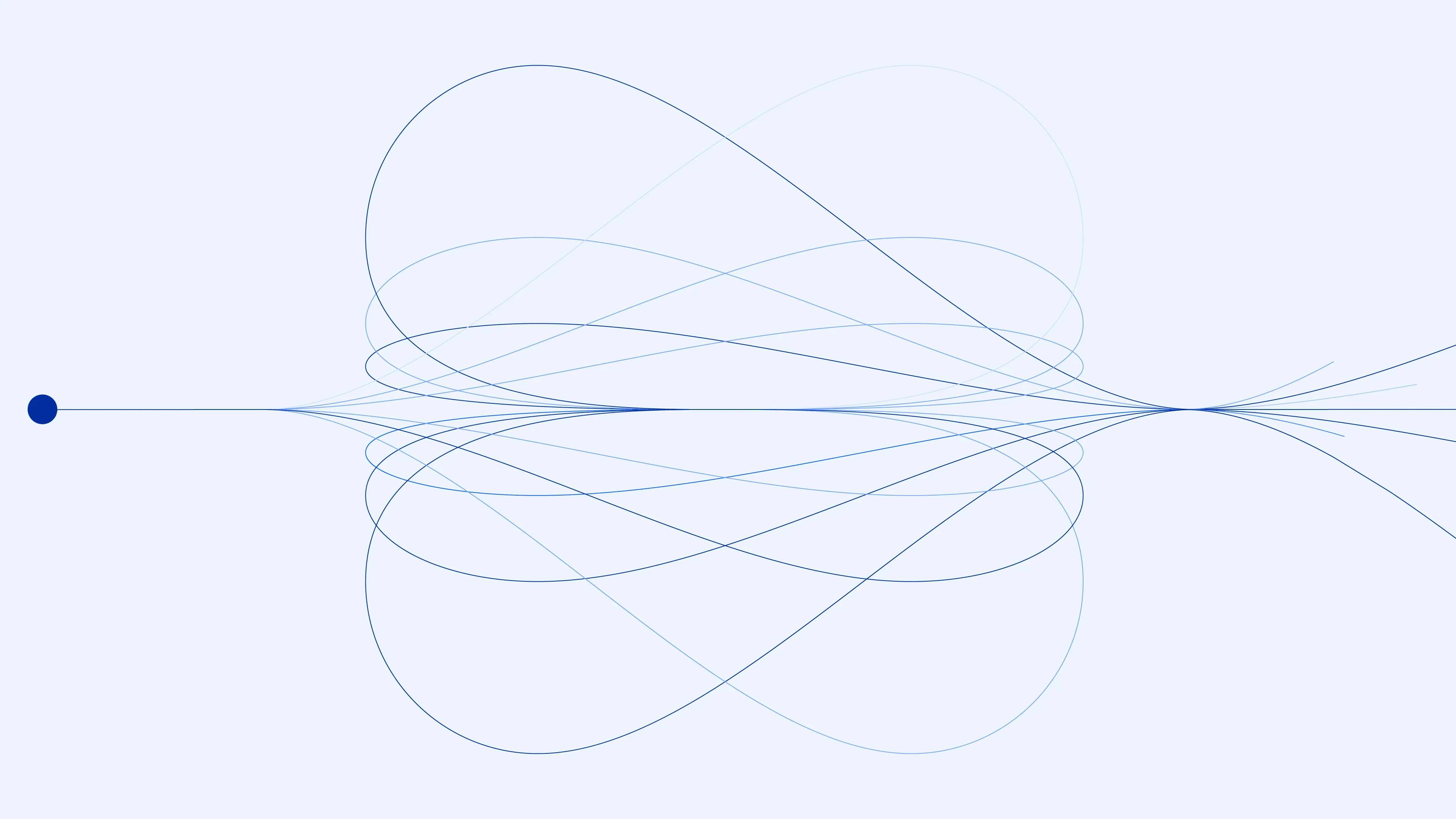

IBM Research has come up with a way to cut the wait. It’s called an “activated” LoRA (or “aLoRA” for short), and it essentially allows generative AI models to recycle the computation they have already performed and stored in memory so they can output answers faster at inference time. The ability to pivot quickly from one task to another matters more as LLM agents grow in popularity.

IBM’s aLoRAs can be called in for specialized tasks, just like plain old LoRAs. But at inference time, aLoRAs can work directly from the embeddings the base model has already computed. As their name suggests, aLoRAs can be “activated” separately from the base model at any time without reprocessing the conversation, because they reuse the embeddings stored in key-value (KV) cache memory.

“LoRA has to run all the way back to the beginning of a long conversation and recompute it, while aLoRA does not,” said Kristjan Greenewald, the IBM researcher leading the aLoRA project.
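To make the difference concrete, here is a minimal Python sketch that only counts how many tokens must be encoded before an adapter can start generating. It assumes a simplified model: switching to a standard LoRA invalidates the cached keys and values, so the whole context is re-encoded, while an aLoRA reuses the base model's cache and encodes only a short invocation prompt. The function name, the invocation length and the context sizes are illustrative assumptions, not figures from IBM.

```python
def tokens_to_encode(context_len: int, invocation_len: int, use_alora: bool) -> int:
    """Count tokens that must pass through the model before the adapter generates.

    Simplified assumption: a standard LoRA changes the weights that produced every
    cached key/value entry, so the full context must be re-encoded; an aLoRA keeps
    the base model's cached entries and only encodes its own invocation tokens.
    """
    if context_len < 0 or invocation_len < 0:
        raise ValueError("lengths must be non-negative")
    if use_alora:
        return invocation_len
    return context_len + invocation_len


# Hypothetical conversation lengths (in tokens) as a chat grows.
INVOCATION_LEN = 10  # assumed length of the prompt that switches the adapter on
for context_len in (500, 2_000, 8_000):
    lora = tokens_to_encode(context_len, INVOCATION_LEN, use_alora=False)
    alora = tokens_to_encode(context_len, INVOCATION_LEN, use_alora=True)
    print(f"context={context_len:>5}: LoRA encodes {lora:>5} tokens, aLoRA encodes {alora}")
```

The LoRA count grows with the length of the conversation while the aLoRA count stays fixed; real latency also depends on hardware, batching and generation length, which this count ignores.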

IBM researchers estimate that an activated LoRA can accomplish individual tasks 20 to 30 times faster than a traditional LoRA. Depending on how many aLoRAs are summoned, an end-to-end chat could unfold up to five times faster.
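Why a 20x to 30x gain per task translates into a smaller end-to-end gain can be illustrated with Amdahl's law: only the time spent in adapter calls gets faster. The sketch below assumes a per-call speedup of 25x (the midpoint of IBM's estimate) and that the rest of the chat's runtime is unchanged; the fractions of time spent in adapter calls are hypothetical.

```python
def end_to_end_speedup(adapter_fraction: float, adapter_speedup: float) -> float:
    """Amdahl's law: overall speedup when only a fraction of the runtime is accelerated.

    adapter_fraction: share of the original runtime spent in adapter calls (0..1).
    adapter_speedup: how many times faster each adapter call becomes.
    """
    if not 0.0 <= adapter_fraction <= 1.0:
        raise ValueError("adapter_fraction must be between 0 and 1")
    if adapter_speedup <= 0:
        raise ValueError("adapter_speedup must be positive")
    return 1.0 / ((1.0 - adapter_fraction) + adapter_fraction / adapter_speedup)


ASSUMED_PER_CALL_SPEEDUP = 25.0  # midpoint of the 20x-30x estimate
for fraction in (0.25, 0.5, 0.8):
    overall = end_to_end_speedup(fraction, ASSUMED_PER_CALL_SPEEDUP)
    print(f"{fraction:.0%} of runtime in adapter calls -> {overall:.1f}x faster overall")
```

With these inputs the overall gain comes out to roughly 1.3x, 1.9x and 4.3x, which is why the end-to-end benefit depends on how many aLoRAs a chat calls.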

“Efficiency matters when you’re trying to get models to do things quickly,” Greenewald added. “Inference-time costs add up in dollars and delays.”

aLoRA: An AI “function” called at runtime to streamline inferencing

The idea for a LoRA that could be activated on its own, separately from the base model, came out of IBM’s ongoing work to speed up AI inferencing. LoRA adapters have emerged as a popular alternative to conventional fine-tuning because they provide a way to surgically inject new capabilities into a foundation model without the high cost of updating each of the model’s weights. With an adapter, 99% of the customized model’s weights stay frozen.
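For readers who want the mechanics: a LoRA adapter leaves a layer's weight matrix W frozen and learns two small matrices, A (rank r by input size) and B (output size by rank r), so the layer computes y = Wx + (alpha/r)·BAx. The pure-Python sketch below shows that forward pass for one layer; the tiny dimensions, the scaling factor alpha and the zero initialization of B follow the common LoRA recipe and are assumptions here, not details of IBM's adapters.

```python
import random


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha: float) -> list[float]:
    """y = W x + (alpha / r) * B (A x). W stays frozen; only A and B are trained."""
    r = len(A)  # rank: A has r rows (r x d_in), B has r columns (d_out x r)
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


random.seed(0)
d_in, d_out, rank = 6, 4, 2
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen
A = [[random.gauss(0, 0.1) for _ in range(d_in)] for _ in range(rank)]  # trainable
B = [[0.0] * rank for _ in range(d_out)]  # trainable, zero-initialized
x = [random.gauss(0, 1) for _ in range(d_in)]

# With B = 0 the adapted layer starts out identical to the base layer.
print(lora_forward(W, A, B, x, alpha=16.0) == matvec(W, x))
```

Because W is never modified, one copy of the base weights can be shared across many adapters, each contributing only its own A and B.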

But while LoRAs have dramatically lowered customization costs, they can drag down inferencing speeds. That’s because their adapted weights must be applied to both incoming queries from the user and the model’s generated responses, creating a lot of extra computation.

IBM researchers wondered if they could cut out some of the work by applying the adapted weights to the generation step only. They took inspiration from traditional software, where a compiled program can perform tasks it wasn’t explicitly built for by dynamically loading an external library of pre-compiled code and calling the relevant function.
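The idea of applying the adapted weights only from the point of invocation onward can be sketched for a single linear layer. Tokens before a hypothetical activation_start index go through the frozen base weights only, so their outputs, and any keys and values cached from them, match what the base model already computed; tokens from that index on also receive the low-rank update. This is an illustrative simplification that assumes a per-position switch, not IBM's implementation.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def alora_layer(W, A, B, tokens, activation_start: int, alpha: float):
    """Apply one linear layer to a sequence of token vectors.

    Positions before activation_start use only the frozen base weights W, so they
    reproduce the base model's outputs and its cached entries can be reused.
    Positions at or after activation_start also add the low-rank update B A x.
    """
    scale = alpha / len(A)  # len(A) is the adapter rank
    outputs = []
    for pos, x in enumerate(tokens):
        y = matvec(W, x)
        if pos >= activation_start:
            delta = matvec(B, matvec(A, x))
            y = [b + scale * d for b, d in zip(y, delta)]
        outputs.append(y)
    return outputs


W = [[1.0, 0.0], [0.0, 1.0]]  # frozen base weights (identity for readability)
A = [[1.0, 1.0]]              # rank-1 adapter
B = [[0.5], [-0.5]]
tokens = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

# Pretend the adapter is invoked at position 2 (the third token).
for pos, y in enumerate(alora_layer(W, A, B, tokens, activation_start=2, alpha=1.0)):
    print(pos, y)
```

Here the first two outputs equal the plain base outputs, and only the third is changed by the adapter, which is what lets the earlier work be reused.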

To get an AI adapter to work like a function, however, researchers had to figure out how to run it without the task-aware embeddings representing the user’s request. Without the benefit of embeddings tailored to the user’s goal, their first few activated-LoRA prototypes failed to match the accuracy of regular LoRAs.

But they eventually found a way to compensate — by increasing the rank of the adapter. With increased network capacity, the adapter could now extract more contextual clues from the general embeddings. In a series of tests, researchers confirmed that their “aLoRA” could now perform on par with a traditional LoRA.
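The cost of raising the rank is easy to estimate: for a layer with d_in inputs and d_out outputs, a rank-r adapter adds r·(d_in + d_out) trainable parameters, so capacity grows linearly with r while the adapter stays small next to the frozen d_in·d_out matrix. The 4096-wide layer and the ranks below are assumed values for illustration; the article does not state which ranks IBM used.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters in a rank-r adapter: A is r x d_in, B is d_out x r."""
    return rank * (d_in + d_out)


D = 4096  # assumed hidden size of a square projection layer
frozen = D * D
for rank in (8, 16, 32, 64):
    added = lora_param_count(D, D, rank)
    print(f"rank {rank:>2}: {added:>7,} adapter params ({added / frozen:.2%} of the frozen layer)")
```

Doubling the rank doubles the adapter's size, so a higher-rank adapter buys extra capacity while the base weights remain untouched.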

“Across a variety of applications, we saw that aLoRA-customized models could now generate text as well as those customized with standard LoRAs,” said Greenewald. “We could get their runtime benefits without the accuracy loss.”

An AI “library” of experimental adapters

IBM Research is releasing a library of new aLoRA adapters for its Granite 3.2 LLMs, aimed at improving the accuracy and reliability of RAG applications. Experimental code to execute the adapters is also available as researchers work on implementing them in vLLM, the open-source platform for serving AI models efficiently. IBM is separately releasing a set of standard Granite 3.2 adapters for immediate use in vLLM. Some of the task-specific LoRAs are updates of the ones IBM released last year through Granite Experiments.

One of the new aLoRAs can rewrite queries in a conversation to make it easier to search for and retrieve key passages. Another can determine if a query can be answered based on the retrieved documents, reducing the risk that the model might hallucinate an answer. A third can estimate how confident the model is in the accuracy of its answer, signaling to users when they should double-check their facts.

Beyond RAG, IBM Research is releasing exploratory adapters that can flag attempts to jailbreak, or bypass, an LLM’s safety controls, as well as check whether LLM outputs meet a set of user-defined standards.

Test-time scaling — for agents and beyond

LLM performance has been shown to improve dramatically if more compute is spent at runtime to both evaluate and improve the model’s initial responses. IBM Research recently improved the reasoning capabilities of its Granite 3.2 models by introducing several methods to review candidate LLM responses under the hood, at test time, and select the best one to output.

IBM Research is exploring whether aLoRAs can provide a similar performance boost in what has been alternately called “test-time” or “inference-time” scaling. An adapter could be designed, for example, to generate multiple answers to a query, and select the answer that combines a low score for hallucination risk with a high confidence score for accuracy.
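A minimal sketch of that selection rule: each candidate answer carries a hallucination-risk score and a confidence score, which in practice might come from adapters like the ones that check answerability and estimate confidence, and the selector keeps the candidate with the best trade-off. The Candidate class, the linear scoring rule and the risk_weight parameter are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    hallucination_risk: float  # 0.0 (well supported) to 1.0 (likely hallucinated)
    confidence: float          # 0.0 (unsure) to 1.0 (very confident)


def select_best(candidates: list[Candidate], risk_weight: float = 1.0) -> Candidate:
    """Pick the candidate with the highest confidence minus weighted hallucination risk."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=lambda c: c.confidence - risk_weight * c.hallucination_risk)


# Hypothetical scores for three sampled answers to the same query.
candidates = [
    Candidate("answer A", hallucination_risk=0.10, confidence=0.70),
    Candidate("answer B", hallucination_risk=0.50, confidence=0.90),
    Candidate("answer C", hallucination_risk=0.05, confidence=0.40),
]
print(select_best(candidates).text)
```

Answer B is the most confident but is penalized for its higher risk, so the selector prefers answer A; raising risk_weight makes the rule more conservative.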

The next frontier in AI involves agents, and researchers want to see if inference-friendly adapters can have an impact here, too. AI agents have been shown to do well at mimicking human reasoning when a complex task is broken into discrete steps for the LLM agent to tackle one by one.

Each of these steps may call for specialized models, both to carry out the step and to evaluate the result, whether the evaluation is done by the same model or by another one. This is where lightweight aLoRAs could really shine, said Luis Lastras, director of language technologies at IBM Research.

“Thanks to their unique architecture, we could potentially see huge improvements in runtime performance,” he said.
