Building the IBM Spyre Accelerator

IBM Spyre is available later this month for IBM z17 and LinuxONE 5 systems, and in December for Power11. Here’s how its journey began in the AI Hardware Center at IBM Research.

IBM is keeping businesses on the cutting edge with Spyre, an AI accelerator chip purpose-built to handle the unique requirements of artificial intelligence computing. AI has gone from an emerging technology a decade ago to table stakes for enterprises looking to compete in today’s marketplace. That makes it essential to run low-latency AI inference while keeping proprietary data secure.

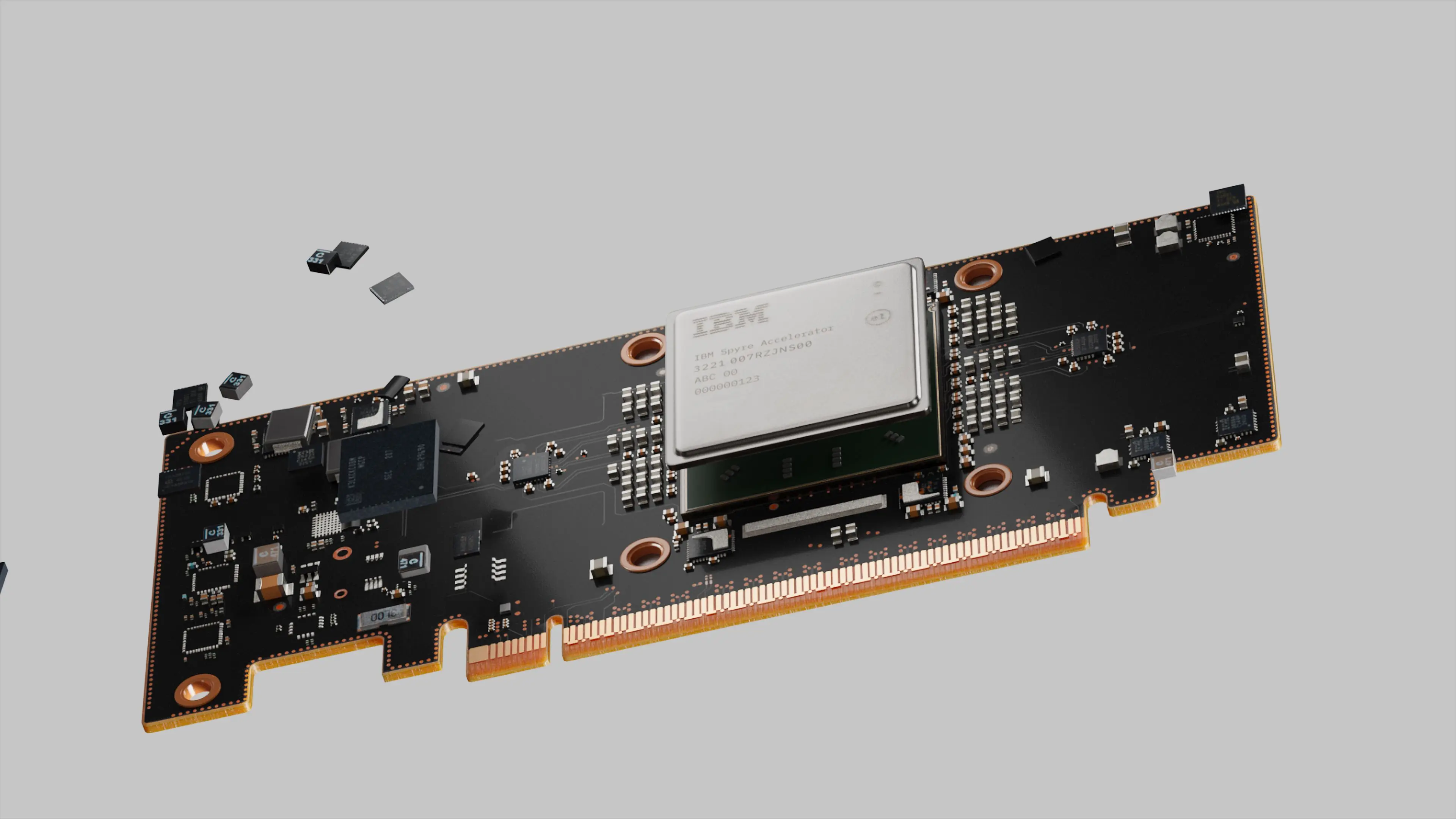

Today IBM announced the general commercial availability of a product that will do just that: the Spyre Accelerator for IBM z17, LinuxONE 5, and Power11 systems. This powerful system-on-a-chip will be available for IBM z17 and LinuxONE 5 systems on October 28, and for Power11 servers in early December.

Spyre’s release punctuates a decade of innovation from IBM Research staff in the AI Hardware Center, founded in 2019. The group, whose concept started as a hallway conversation about low-precision computing in 2015, has now yielded an AI chip with 32 individual accelerator cores and 25.6 billion transistors. The Spyre PCIe card design makes it possible to cluster up to 16 cards in an IBM Power11 system and 48 cards in an IBM z17.

Conventional CPUs and GPUs struggle to deliver the scale and efficiency that modern AI workloads demand. That is one of the main reasons the AI Hardware Center focuses on creating next-generation chips, systems, and software that are up to the task. Spyre, designed to run generative and agentic AI workloads on-premises, builds on lessons from the earlier Telum processor’s AI core in IBM z16, with a strong emphasis on software enablement to fully unlock hardware capabilities.

Even before the AI Hardware Center’s formal launch, IBM researchers pioneered low-precision deep learning — demonstrating 16-, 8-, 4-, and even 2-bit training and inference with minimal accuracy loss. This foresight positioned IBM to anticipate AI’s hardware needs before the advent of transformers and the Cambrian explosion that followed.
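To give a rough sense of what bit width means here, the sketch below rounds a handful of made-up weights to 16-, 8-, 4-, and 2-bit signed integers and measures how far the reconstructed values drift from the originals. It uses plain round-to-nearest symmetric quantization, a standard textbook scheme chosen purely for illustration. The article does not describe the techniques IBM's researchers used, and the function names and sample values are hypothetical.

```python
def quantize_dequantize(values, bits):
    """Round values to signed `bits`-bit integers, then map them back to floats.

    Illustrative only: symmetric, per-tensor, round-to-nearest quantization.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for a signed symmetric grid")
    qmax = 2 ** (bits - 1) - 1          # 127 for 8-bit, 7 for 4-bit, 1 for 2-bit
    peak = max(abs(v) for v in values)
    if peak == 0:
        return list(values)             # all zeros: nothing to quantize
    scale = peak / qmax                 # size of one integer step in float units
    levels = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return [q * scale for q in levels]


def mean_abs_error(original, approx):
    """Average absolute difference between two equal-length sequences."""
    return sum(abs(a - b) for a, b in zip(original, approx)) / len(original)


# Hypothetical sample weights, not taken from any real model.
weights = [0.82, -0.41, 0.05, -0.97, 0.33, 0.60, -0.12, 0.0]

# Reconstruction error at each bit width mentioned in the article.
errors = {
    bits: mean_abs_error(weights, quantize_dequantize(weights, bits))
    for bits in (16, 8, 4, 2)
}

for bits, err in errors.items():
    print(f"{bits:>2}-bit mean absolute error: {err:.6f}")
```

With this naive scheme the error grows as the bit width shrinks, which hints at why keeping accuracy loss minimal at 4 or 2 bits takes more than simple rounding.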

The Center’s collaborative model brings together IBM business units, academic institutions, and industry competitors to co-develop what’s next in full-stack AI solutions.
